I really like Emo so far, but he is a little random. He doesn’t seem to know or pay any attention to what is around him, despite having a camera that could be used to emulate that. It’s early days, so maybe something along these lines is already planned, but if not, maybe I’ll give some dev an idea!

I’ve dabbled in machine learning and machine vision in the past, and there are several things you can do with video to emulate awareness of the world around the robot without needing to figure out what things actually are. It’s not too intensive and could be integrated really well into a growth mechanic in Emo.

First is dominant colour position. Basically you take a low-res image with the camera and analyse it to find the most common colour, least common colour, brightest colour and darkest colour. Each colour comes with a relative position (left of centre, above centre, above right of centre, etc.), which can be used with a tiny bit of math to make Emo turn towards or away from a particular colour, dark area, etc. Say his favourite colour is the least common in the image: he could turn to it, then move in that direction, potentially moving towards something, say, red as if he is attracted to it.
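To make that a bit more concrete, here is a rough sketch of the colour analysis in plain Python. It is only an illustration and not anything from Emo’s firmware: the function names (`quantise`, `colour_summary`, `turn_command`), the 4-level colour binning and the dead-band value are all my own assumptions, and the image is just a list of rows of `(r, g, b)` tuples.

```python
from collections import defaultdict


def quantise(pixel, levels=4):
    """Snap an (r, g, b) pixel to a coarse bin so similar shades count together."""
    step = 256 // levels
    return tuple(min(c // step, levels - 1) for c in pixel)


def colour_summary(image, levels=4):
    """Find the most/least common and brightest/darkest colour bins.

    Each result is (bin, (x, y)) where x and y are the average offset of that
    colour from the image centre, scaled to -1..1. Negative x is left of
    centre, negative y is above centre.
    """
    height, width = len(image), len(image[0])
    stats = defaultdict(lambda: [0, 0, 0])  # bin -> [count, sum_x, sum_y]
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            entry = stats[quantise(pixel, levels)]
            entry[0] += 1
            entry[1] += x
            entry[2] += y

    half_w = max((width - 1) / 2, 1)
    half_h = max((height - 1) / 2, 1)

    def describe(bin_):
        count, sum_x, sum_y = stats[bin_]
        x = (sum_x / count - (width - 1) / 2) / half_w
        y = (sum_y / count - (height - 1) / 2) / half_h
        return bin_, (x, y)

    bins = list(stats)
    return {
        "most_common": describe(max(bins, key=lambda b: stats[b][0])),
        "least_common": describe(min(bins, key=lambda b: stats[b][0])),
        "brightest": describe(max(bins, key=sum)),  # crude brightness: r+g+b bin sum
        "darkest": describe(min(bins, key=sum)),
    }


def turn_command(position, attract=True, deadband=0.15):
    """Turn a relative position into a steering hint (hypothetical command names)."""
    x = position[0]
    if attract:
        if abs(x) <= deadband:
            return "straight"
        return "right" if x > 0 else "left"
    # Avoiding: steer to the opposite side (arbitrarily left if it is dead ahead).
    return "left" if x >= 0 else "right"


# A tiny 4x4 "photo": dark blue everywhere with one red pixel in the top right.
image = [[(0, 0, 64)] * 4 for _ in range(4)]
image[0][3] = (255, 0, 0)
summary = colour_summary(image)
print(summary["least_common"], turn_command(summary["least_common"][1]))
```

Using the average position of each colour is the simplest option. A colour that is spread all over the frame will average out near the centre, so a real version might want to look at the largest blob instead.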

Combine this with a system to track and evolve Emo’s liking for different colours (and dislike of their opposite colours) so that Emo will begin to like the dominant colour of his play area.
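The tracking side could be as simple as a score per colour that gets nudged every time Emo takes a look around. The sketch below is one possible way to do it, not a known design: colours are coarse `(r, g, b)` bins with 4 levels per channel, "opposite" is taken to mean the complementary bin, and the names `opposite`, `update_likes`, `favourite` and the `rate` value are assumptions of mine.

```python
def opposite(bin_, levels=4):
    """Complementary colour bin, e.g. (0, 0, 1) -> (3, 3, 2) with 4 levels."""
    return tuple(levels - 1 - c for c in bin_)


def update_likes(likes, dominant, rate=0.1, levels=4):
    """Nudge the dominant colour's score towards +1 and its opposite towards -1.

    `likes` maps colour bins to a score between -1 (dislike) and +1 (like).
    The dict is updated in place and also returned.
    """
    like = likes.get(dominant, 0.0)
    likes[dominant] = like + rate * (1.0 - like)

    opp = opposite(dominant, levels)
    if opp != dominant:  # only equal when levels is odd and the bin is mid-grey
        dislike = likes.get(opp, 0.0)
        likes[opp] = dislike + rate * (-1.0 - dislike)
    return likes


def favourite(likes):
    """Colour bin with the highest score, or None if nothing has been seen yet."""
    return max(likes, key=likes.get) if likes else None


# Emo spends three "looks" in a mostly dark blue play area.
likes = {}
for _ in range(3):
    update_likes(likes, (0, 0, 1))
print(favourite(likes), likes)
```

Because each update only moves the score a fraction of the way, a new play area would slowly overwrite old preferences rather than flipping them overnight, which feels about right for a growth mechanic.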

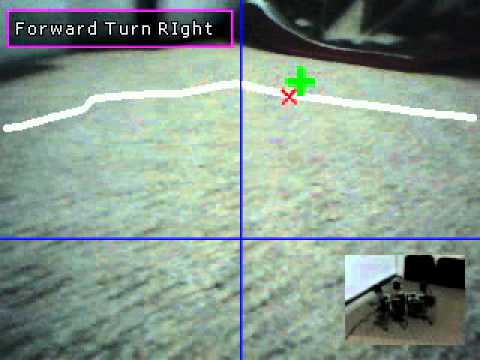

Second is shape recognition via edge filtering. Basic recognition of shapes and lines, and of where those shapes and lines sit in the image, can help with object avoidance, but can also be used to make Emo appear to see and recognise objects. For example, if a square is detected and it’s smaller than n% of the screen, Emo could turn toward the square and move forward until it either disappears or reaches y% of the total screen space. If it’s already bigger than y%, he could back up until it’s smaller and then play an animation of looking up and down at whatever is in front of him.
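Here is a stripped-down sketch of that approach/back-up logic. To keep it short it does not actually classify shapes: it just runs a very crude edge filter on a greyscale image (a list of rows of 0..255 values) and uses the bounding box of the strong edges as a stand-in for "the square". The thresholds `small` and `large` play the role of n% and y%, and all function and action names are made up for illustration. It is also stateless, so instead of remembering that he is mid-approach, Emo simply inspects anything that falls between the two sizes.

```python
def edge_mask(gray, threshold=50):
    """Mark pixels whose brightness jumps sharply to the right or lower neighbour."""
    height, width = len(gray), len(gray[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(height - 1):
        for x in range(width - 1):
            gx = abs(gray[y][x + 1] - gray[y][x])
            gy = abs(gray[y + 1][x] - gray[y][x])
            mask[y][x] = gx + gy > threshold
    return mask


def bounding_box(mask):
    """Return (left, top, right, bottom) around all edge pixels, or None."""
    points = [(x, y) for y, row in enumerate(mask) for x, hit in enumerate(row) if hit]
    if not points:
        return None
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def decide(gray, small=0.10, large=0.40, threshold=50):
    """Pick an action from how much of the frame the detected outline fills."""
    box = bounding_box(edge_mask(gray, threshold))
    if box is None:
        return "wander"  # nothing interesting in view
    left, top, right, bottom = box
    height, width = len(gray), len(gray[0])
    fraction = (right - left + 1) * (bottom - top + 1) / (width * height)

    if fraction > large:
        return "back_up"  # too close, make some room
    if fraction >= small:
        return "look_up_and_down"  # comfortable distance, inspect it
    # Small and far away: line up with it first, then drive towards it.
    offset = ((left + right) / 2 - (width - 1) / 2) / max((width - 1) / 2, 1)
    if abs(offset) > 0.2:
        return "turn_right" if offset > 0 else "turn_left"
    return "forward"


# 10x10 dark frame with a small bright 2x2 block off to the right.
frame = [[0] * 10 for _ in range(10)]
for y in (4, 5):
    for x in (7, 8):
        frame[y][x] = 255
print(decide(frame))
```

A real version would more likely use a proper edge detector and contour finder from a vision library, but the decision part would look much the same.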

Again, you could track Emo’s liking for different shapes and lines to evolve different behaviours depending on his environment and what he is exposed to.

Here is a video of my hexapod robot using nothing but a webcam to avoid objects and explore its environment. No distance sensors or IR, just a webcam and that’s it.

Combining something like this with the distance sensor on the front could help prevent Emo from running into things like his skateboard, which aren’t high enough to trigger the sensor.