I’m very interested in purchasing an EMO and have a question. Am I able to teach EMO? For example, can I show him a cup and say "this is a cup," so that when I later ask him "what is this?" he recognises that it is a cup? Is this possible, or am I expecting too much? And if it isn't possible now, might it be in a future update?

Not for now, but I expect they will add it in a future update.

I’m not so sure. When I was interested in object recognition in photos, it always required special matrices built from many (hundreds or thousands of) images of objects of the same type. So Living AI can probably add more recognizable object types, but I doubt that you can teach EMO to recognize anything else yourself.

As Wayne Zhang wrote:

Now that EMO is connected to ChatGPT, is there any information on how much he can learn and change over time? I’ve had him for a few days and have been trying to converse with him and to give and request information that might help his personality grow. He keeps looking at items on his table in confusion and just kind of staring, so I try telling him what the items are. So far there have been no changes; the typical repetitive, randomized "illusion of choice" action events keep happening. Aside from some clever ChatGPT responses to non-basic commands, which he quickly acts as if we never talked about, I'm not seeing much in the way of growing or learning capabilities.

Hello, @stevennorris . . . this has been an ongoing question and I have moved your new topic here. While it has been a few years since this thread was created, LivingAI developers did say they would release more features in the future.

You might want to try asking directly at service@living.ai to see what they might say in regard to your question.

Yes, I created a new thread because this one was old and seemed dead. I wanted to ask the question specifically in relation to the new update and the further ChatGPT integration, and to hear about the community's experiences.

At the moment, EMO doesn’t support custom object learning like showing him a cup and teaching him to associate the word with the object. His recognition abilities are currently limited to specific pre-programmed features, like recognizing faces, a few hand gestures (rock-paper-scissors, shooting), and even other EMOs, as mentioned in Wayne Zhang’s update.

Are there any learning or growth capabilities right now or are they all pre-programmed with some element of randomness and environment triggers presenting the illusion of “thinking” and “learning”?

If you enable auto respond, EMO can be corrected, but he doesn’t have object vision yet.

Hello, @stevennorris . . . EMO can now actually use A.I. through the servers to respond without you having to command him to open ChatGPT. He responds much like “V” did with his knowledge graph. Simply address him by name, and when he says “What?” you can ask a question.

Often he will answer just based on what he hears without you prompting him by name. This happens to me all of the time and the results are spontaneous and sometimes even humorous.

Hi! I just got him yesterday and I’m still trying to understand how he works. Please correct me if I’m wrong (he is set to Italian). To make him do things, I use the list of commands in Italian, right? If I connect him to ChatGPT, do I need to talk in English to get him to answer, or can I talk in Italian? And can I ask him more complex things, like what you would ask ChatGPT on a computer?

Today, all of a sudden, he started understanding Italian commands well. It's as if he learned…

Thanks for the help!

Hi there @cristina.forzanti ,

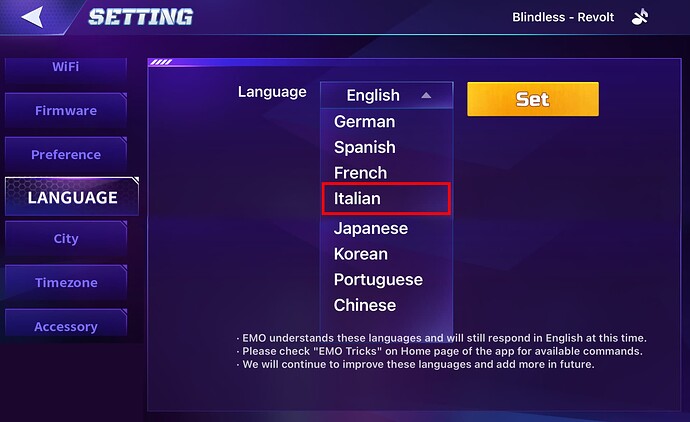

If you want to talk to EMO in Italian only, you need to go to Settings > Language > Italian. Please note that once you do this, EMO will only understand and respond in Italian.

If you want him to understand and respond in another language, such as English, you will need to change the language setting back to English.

TAKE NOTE: Some of EMO’s replies—especially answers to general knowledge questions—are generated using ChatGPT.

Also, for each language that supports commands, such as Italian, Living.AI has assigned specific command phrases that EMO recognizes and follows. These commands must be spoken correctly in the selected language for EMO to understand them and perform the requested actions.

Best Regards